The previous post concluded that any sufficiently complex GUI can not realistically be organized into a tree. Although the widgets themselves might form a tree, those widgets will want to attach to a retained model state, composed of mutable objects and their attached metadata.

But Rust functions hate sharing mutable objects for good reason. Shared mutability creates a whole pile of nasty problems, which Arc<Mutex<T>> does little to mitigate. So we arrive at the next part of my series! We will endeavor to break all the difficulties of shared mutability into 3 simple categories (concurrency, invalidation, and dependency) so that we can compare all the various solutions used by other programming languages and frameworks. Hopefully that does something to motivate the choices I made in the my own unnamed framework, which will be presented in the next post.

As in the last post, we are using a counter list as our primary example. But keep in mind, these problems effect all kinds of stateful applications, not just GUIs.

Category 1: Concurrency

Any problem caused by simultaneous access and mutation of state.

Imagine we have a callback written in C, which is intended to increment a counter on click:

void on_click(counter *obj) {

int current_count = obj->count;

int new_count = current_count + 1;

obj->count = new_count;

}

What kind of issues might occur if this code is executed simultaneously on two different threads, or on two different synchronized clients, or while the GUI is rendering a new view state? It is an obvious data race, causing unintuitive behavior at best and totally undefined behavior the rest of the time.

It is relatively easy to maintain the consistency of a single counter like this, within a single memory space. Just use atomic types! But the problem gets harder when larger datastructures become involved, or when updates rely on potentially slow IO operations. For example, we need to make sure the counter list is unaltered while the renderer is iterating over it. Or that changes are not made to a document in memory while it is being serialized out to a file.

Single-threaded Execution

The simplest solution is “just don’t ever do multiple things at the same time.” This is the approach taken by JS, Python, and a surprising number of GUI frameworks. Most GUIs have a main loop that is responsible for handling input and rendering output, so they synchronously alternate between the two. Any multithreading is generally used for rasterization or compositing, not for state updates. Still, it would be nice if our state management solution was multi-thread-able, if only to make programmers lives a bit easier. And anyway, single-threaded execution doesn’t solve the problem in general. Inevitably asynchronous tasks get spawned and awaited inline with state updates, allowing many of the same consistency problems as multi-threaded applications suffer from.

Mutexes

The usual answer to shared mutability in Rust is “wrap everything in Mutex<T>!” Arguably most C++ and Java projects do the same sort of thing. But mutexes have pretty poor ergonomics. And remember, going back to a single thread only replaces the Mutex<T> with an RefCell<T>.

Even worse, mutexes require the programmer to think hard about lock granularity, or risk causing nasty concurrency problems. To see how, imagine a variation of our counter application with fine-grain locks. It has only two integer counts which could be either negative or positive, but their total must always be greater than 0.

let a: Mutex<i32>;

let b: Mutex<i32>;

fn subtract_from_a(v: i32) {

let this = a.lock();

let other = b.lock();

if *this + *other > v {

*this -= v;

}

}

fn subtract_from_b(v: i32) {

let this = b.lock();

let other = a.lock();

if *this + *other > v {

*this -= v;

}

}

If you spotted the deadlock potential here, good job! If the two subtractions are made simultaneously, the first one could lock a while the second one locks b, preventing either from checking the combined total. Since Rust requires us to choose our granularity at definition time, not access time, our only option is to place both counters under a single mutex. But this is too coarse for other code that only needs to access to an individual counter.

These sorts of synchronization decisions are hard to make correctly, hard to debug when they go wrong, and hard to refactor afterwards.

Streams

Instead synchronizing data accesses, many programmers (including myself) prefer message passing whenever feasible. Not only is this a common pattern in Rust, it is fundamental to languages like Go, Clojure, Crystal, and Erlang. When used to parallelize computation, streams of messages might manage a task list or actor system. But in this context, we are less concerned with the destination or source of a steam and more interested in its contents. Specifically, by storing units of state as streams of values rather than individual values, we can make modifications without fear of corruption or deadlock. Just send the new state as soon as it is ready! Unlike mutexes, streams also help us solve dependency problems, discussed in section 3. But streams do have many of the same granularity issues, and create additional consistency hazards besides.

If we simply replace the mutexes in the previous example with streams, the lack of blocking synchronization will enable a nasty bug commonly called “write skew”. If two large subtractions are made simultaneously, each may independently and incorrectly determine that the combined count is positive.

let a: Stream<i32>;

let b: Stream<i32>;

fn subtract_from_a(v: i32) {

let this = a.peek();

let other = b.peek();

if this + other > v {

a.send(this - v);

}

}

fn subtract_from_b(v: i32) {

let this = b.peek();

let other = a.peek();

if this + other > v {

b.send(this - v);

}

}

This is one reason why frameworks such as ReactiveX recommend so strongly against ever pulling values directly out of streams, and instead make use of stream combinators like Filter, Map, Join, Zip, Reduce, and so on. Those will be discussed later.

Transactions

Rather than grouping parts of the state into synchronized units behind a mutex, we can group modifications together into atomic units behind a transaction. It is not very common for ordinary datastructures to use transactions, but it is exactly how databases solve the concurrency problem.

Databases might feel a little out of left-field, given the previous solutions come from programming languages and GUI frameworks. But they have to solve all the same problems being discussed here. What is a database but a collection of inter-related objects which are unpredictably modified as some software receives events?

And the idea of bringing transactions to ordinary application state is not new. The concept, called software transactional memory, was very popular in research circles around 2008. But the performance penalty was fairly high, and the blocking behavior wasn’t superior enough vs mutexes to ever drive mass adoption. Now over a decade later, I feel like the software community is revisiting transactions as a common mode of synchronization. Embedded databases such as SQLite, Redis, RocksDB, and Sled are all increasingly popular for application state management. And instead of blocking the application during conflicting accesses, advances in multiversion concurrency control allow all transactions to proceed lock-free, before being rejected or otherwise merged at commit.

This time around, our positive counting application could look something like:

let a: TxCell<i32>;

let b: TxCell<i32>;

fn subtract_from_a(v: i32) {

loop {

let tx = transaction();

let this = a.load(&tx);

let other = b.load(&tx);

if this + other > v {

a.store(&tx, this - v);

}

if tx.commit().is_ok() {

break;

}

}

}

fn subtract_from_b(v: i32) {

loop {

let tx = transaction();

let this = b.load(&tx);

let other = a.load(&tx);

if this + other > v {

b.send(&tx, this - v);

}

if tx.commit().is_ok() {

break;

}

}

}

Admittedly, this is a lot more verbose. But the retry loop is easy to sugar over, and it doesn’t suffer from any deadlocks or consistency issues!

Transactions offer another benefit to GUI applications specifically: easy undo/redo! It is hard to overstate how painful it can be to implement an undo/redo stack from scratch. Particularly in a language like Rust where awful pointer hacking isn’t an option. Multiversion concurrency control makes it pretty trivial to roll transactions forward and back even after commit, without any weird behavior or dangerous code. In a database context, this is only really used as a security measure. But in our sort of framework, it should be a first-class feature.

Category 2: Invalidation

Any problem caused by state moving around while referenced.

Many kinds of state modifications (particularly to collection types) cause parts of the state to change location in memory, or to be deleted entirely. For example, when the user clicks the red “X” next to one of our counters, we need to free the memory used by that counter object. Additionally, the following counter objects are inevitably shuffled around in some array somewhere.

This would all be fine, except other random bits of code are all holding references to those objects. Parent widgets hold references to their children. Event handlers hold references to bits of state or streams of events. Our counter totals hold references to the individual counts!

Ownership

The simplest solution is to either ban multiple references, or ban mutability. Rust has the best of both worlds! So long as some data is provably immutable, the programmer is free to reference it as many times as they like. If nothing can change, no pointers can be broken. When mutations do happen, the programmer is only allowed a single active reference, which will always be valid after the mutation. Very elegant.

Alas, the previous two posts already pointed out ways in which stateful applications require data which is both shared and mutable. Maybe you can squeeze an input/output loop on top of a tree structure in order to accommodate three alternating owners (render function, update function, parent widget), but at some point more complicated solutions to invalidation-related problems become necessary.

Reference Counting

The first possibility is good old reference counting. Rust and C++ developers can specifically enumerate the co-owners of each object so that it is kept alive until the exact moment all references get dropped. Python also uses reference counting, but since that fact is largely invisible to the programmer, I’m grouping it more with the garbage collected languages in the next section.

Alas, explicit reference counting has rough ergonomics and surprisingly bad performance. No one wants half their GUI code dedicated to calling Arc::clone. It is absolutely doable, but we should look for other options.

Garbage Collection

Garbage collected languages never let objects die, ever. So long as there is some path through the program to that old state, it will still exist. This seems like the right behavior for a state management framework, and it certainly makes GUI frameworks easier to implement. Otherwise how could the JS ecosystem be working on their 800th system for state-management while other languages are barely getting started on the problem? Some of my early prototypes tried to lean on other tracing garbage collectors written for Rust, but none of them beat reference counting on an ergonomic level. Closures seem to be the simplest reason for this, since there is no automatic mechanism for a Rust library to see what objects a closure has captured. But looking back I’m not too upset because garbage collection isn’t necessarily the right solution anyway.

My problem with garbage collection is that it treats deletion as a second-class kind of operation on state. If the user presses that big red X next to a counter, we want to be sure that it and its memory are properly gone. If there is some event listener or callback somewhere not getting unregistered, that is a serious bug, not a nice reason for the memory to stick around. Deletion should have guarantees, same as any other sort of state modification.

Weak References

In the face of unpredictable mutation, Rust programmers almost always fall back on weak references in the form of IDs and indexes. Just put all your state in a big dictionary, store keys instead of pointers!

Weak references are a little annoying to work with, because the programmer must consider what will happen when an object is deleted unexpectedly. While a good system for weak references will guarantee an error in that case (rather than returning some other random data), the error still needs to be handled intelligently. But if we declare deletion a first-class operation like any other mutation, then we must be willing to treat it that way, and write explicit code for handling its outcomes.

I feel my argument is backed up by enormous variety of weak references available across programming environments today, however rarely they get called “weak references”. Relational databases identify rows by primary key, returning an error if a query asks for a row which has been removed. And key-value databases have “key” in the actual name. The whole job of generational_arena is to give you a pointer that knows when it stops working. And what is a dictionary or array but a weak association from one piece of information to another?

My favorite weak references are those provided by the Vale programming language. In Vale, all shared references are weak references. There is no syntactic overhead, the language just checks a version number on your pointers before dereferencing them. And it turns out the same system works amazingly well in Rust (though I still haven’t published a crate for it). For now my implementation works by permanently leaking small heap allocations to use as wrappers around each object pointer. When an object is deleted, the implementation sets the pointer inside to null, so any code passing through the wrapper knows to produce an error. Because we never need enumerate all the references to a given wrapper, each is Copy + Deref + 'static! I can not understate how nice a copyable static reference feels vs cloning Arcs or battling borrowck. The reliance on heap-leaking can waste memory in some cases, but by incrementing a version number inside each wrapper, we can recycle them almost endlessly before needing new allocations.

In Vale, the programmer can avoid constant repeated null checks by leaning on static analysis, and by tethering pointers to the current function scope as soon as the first check passes. We could hypothetically ask the Rust compiler to implement some similar optimizations just for our weak pointers, but by choosing transactions as our preferred concurrency solution, we don’t actually have to! Checking a weak pointer inside transaction provides a new reference borrowing the transaction, which can be fearlessly deref-ed until the transaction is dropped. After all, the entire point of MVCC is to maintain a isolated versions of the whole universe.

And even better, by tracking dependencies between objects in the state (see below), we can avoid being surprised by deletions. When a deletion happens, we should expect relevant code to rerun immediately. No finding out later that something became null while you weren’t looking.

Category 3: Dependency

Any problem caused by lack of awareness of state changes.

When you make a change to your application’s model state, corresponding updates should be made to any part of the widget tree displaying that state. In turn, that needs to cause changes to the rendered pixels visible on the screen. The pixels depend on the model state. An even more direct example of this sort of dependency is the total shown by our counter app in part 2. It must be aware of any changes to the states of the individual counters.

If changes are not correctly propagated through relevant systems, the application will behave unpredictably. Sometimes this is just annoying, other times people can get hurt.

I like to imagine changes flowing through the program like water. When a change is made somewhere “upstream”, all the entities “downstream” of that change need corresponding updates. In some contexts, the upstream object is called the subject and the downstream object is the observer. But while subjects and observers are directly linked, upstream changes can be very distant from their downstream effects. In a GUI, clicking a button is often upstream of some pixels changing color.

Sometimes I wonder if all I’m writing is “the blog post where sam invents lots of terminology”. My rational is that no one really agrees on a consistent terminology for this stuff, and when they do, it rarely captures the trade-offs I’m trying to make. For example, anyone familiar with the Gang of Four design patterns will immediately recognize 3.5 of the 4 solutions below as random variations of the observer pattern. But those variations cover so much design space! It isn’t enough to sy “ah yes, my framework uses observers”. We need to be more specific.

Callbacks

You need to run some code when state changes, so just run that code! Generally each upstream state object has some setter functions and attached lists of callbacks to downstream objects, which each setter iterates over and eagerly executes. Any JS programmer is very used to this solution, but it common in virtually all programming languages. Rust programmers use dynamically-dispatched callbacks less often because it is hard for a Vec<Box<dyn Fn>> to randomly reach out and update arbitrary data elsewhere in the program. But we can also include hard-coded “static callbacks” in this category of solution:

fn increment_the_count(&mut self, parent: &mut Application) {

self.count += 1;

// Don't forget to tell our parent about the change!

parent.update_the_total(self.index, self.count);

}

Static callbacks almost never scale well. If we ask the programmer to manually track dependency, they’ll inevitably screw it up and cause weird unpredictable bugs. But even if the code is completely bug-free, static callbacks clearly promote code spaghettification.

Dynamic callbacks also have crappy ergonomics. Any time a programmer adds a dependency on some upstream state, they need to read through the docs to find the correct callback to register. Sometimes the previous programmer completely neglected to add a callback list to the upstream object, or worse, the callbacks are not reliably called in all cases. Then the new programmer has to waste time refactoring and debugging. Once that is done, they somehow need to share data between the actual downstream code they care about and the callback which keeps it up-to-date. And finally, failing to unregister the callback at the right time risks leaking memory (the lapsed listener problem) or loosing dependency. Gross!

Event Buses

Instead of providing callback functions directly to the upstream object, we can have the upstream object drop notifications into some common bus. Any downstream objects can poll the bus for matching notifications, to decide if an update is required. Event buses are nice because they further reduce coupling between modules. In languages like JS, this prevents import cycles and refactoring annoyances. In Rust it greatly reduces the spread of shared references through the code base. In particular, by queuing events instead of processing them immediately, it is easier to order Rust function calls in a way that provides mutable access to relevant objects.

For example, we might replace this sort of callback logic

let total = Arc::new(Mutex::new(Total::default()));

for _ in 0..3 {

let counter = Arc::new(Mutex::new(Counter::default()));

{

let counter = counter.clone();

counter.lock().button.add_callback("click", move |event| {

let this = counter.lock();

this.count += 1;

this.trigger_callback("change", (this.count - 1, this.count));

});

}

{

let total = total.clone();

counter.lock().add_callback("change", move |(prev_n, new_n)| {

let this = total.lock();

this.total = this.total - prev_n + new_n;

});

}

}

with something more like

fn update_count(this: &mut Counter, event: Click, bus: &Bus) {

this.count += 1;

bus.send(CounterChange {

id: this.id,

prev: this.count - 1,

next: this.count,

});

}

bus.register_state(Counter::default());

bus.register_state(Counter::default());

bus.register_state(Counter::default());

bus.register_handler(update_count);

fn update_total(this: &mut Total, event: CounterChange) {

this.total = this.total - event.prev + event.new;

}

bus.register_state(Total::default());

bus.register_handler(update_total);

Many databases provide this kind of event bus through a publish/subscribe or “pubsub” interface. However, this is generally only useful for propagating database changes over one hop: from the database’s memory to the application’s memory. Another solution is still needed to manage the overall flow of data across many layers of upstream->downstream couplings.

The first disadvantage of event buses in general is that events are less tied down to specific objects. It is on the programmer to fully identify each event so that references can be recovered as needed. Secondly, the semi-random ordering of event handlers tends to create nasty race conditions, which are impossible to reliably reproduce and debug. And thirdly, event buses do not solve all the ergonomic issues plaguing callbacks: events must be reliably sent, handlers must be reliably registered and unregistered, and programmers must manually maintain the link between state changes and corresponding events.

Stream-based Reactivity

The thesis of reactive programming is “you should structure everything else around the unavoidable fact that data changes over time”. No manually tacked on callbacks or event busses, each unit of data needs to be dependency-aware by default! One approach (arguably popularized by ReactiveX) is to present each variable as a collection of values over time. We discussed this solution somewhat in the section on concurrency, but left off discussing operators/combinators. That is because stream operators exist specifically to solve dependency-related problems!

Getting your brain around to this mode of thinking can be a little tricky, but it can be pretty rewarding once it clicks. It makes all dataflow perfectly obvious, and tightly controllable. An extremely simplified version of our counter app could look something like this:

let counters: Observable<Vec<i32>> = events.scan(|old_state, event| {

let mut new_state = old_state.clone();

match event {

AddCounter => new_state.push(0),

IncrementCount(counter, value) => new_state[counter] += value,

}

new_state

}, Vec::new());

let total: Observable<i32> = counters.map(|state| state.iter().sum());

let view = combineLatest((counters, total)).map(|(counters, total)| view! {

for (i, c) in counters.enumerate() {

text(c),

button("-", IncrementCount(i, -1)),

button("+", IncrementCount(i, 1)),

},

button("+", AddCounter),

});

render(view, evets);

I believe that this kind of functional programming can be very elegant when you get used to it. But it is very hard to use for overall state-management because the entire model state inevitably winds up in a single stream. As a demonstration of that fact, try to modify the above example so that it ignores any event which would drop the total below 0. And add that traditional stream-based reactivity frameworks mostly avoid providing first-class solutions for arbitrary references between state.

Anyway, if you are ready to put the whole model state into a single update loop, you might as well use immediate mode. In fact, purely functional languages like Elm are really awesome for building immediate-mode-ish GUIs on top of streams of events! To be clear, I still believe that streams are a great way to handle events. But it is hard to squeeze all the model state into them as well.

Variable-based Reactivity

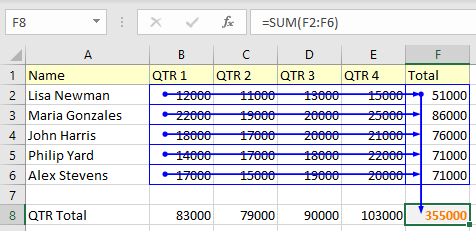

While relatively obscure in mainstream programming, variable-based reactivity is something non-expert users have come to expect from all applications. As best I can tell, the very first computer system to implement reactive programming was LANPAR, back in 1969! From there it made its way into the VisiCalc program and into all the spreadsheet applications we use in the present day:

That thing where your expressions automatically rerun whenever a referenced variable changes, that is what I want! I got used to it when writing VueJS years ago, and then set out to implement it in Rust. I discovered that you need good solutions to concurrency-related problems and invalidation-related problems before you can worry about building a reactivity system, but it is hard to beat the ergonomics of variable-based reactivity once those problems are solved.

Variable-based reactivity is equivalent to stream-based reactivity at some level, but the two have very different interfaces, resulting in very different usage patterns. The dataflow graph in a stream-based reactivity framework is constructed with something like the builder pattern, using operators to combine streams together and declare downstream state. Variable-based reactivity frameworks build the same dataflow graph automatically by inspecting the accesses made by each expression. Some implementations (Excel, DDLog, Svelte) parse the expressions ahead of time, while others (Vue, MobX, my unnamed framework) perform runtime analysis. Either way, the framework can traverse the dataflow graph to propagate changes in the correct order, without buggy human intervention.

Not to be too much of a hipster, but here is a complete implementation of our counter example (including deletion and color) in roughly 30 lines of Svelte:

<script>

let counters = [

{count: 42, color: 'blue'},

{count: 12, color: 'orange'},

{count: 7, color: 'green'},

];

$: total = counters.reduce((a, c) => a + c.count, 0);

function addCounter() {

let color_num = 0x1000000 + Math.random()*0xffffff;

let color = '#' + color_num.toString(16).substr(1,6);

counters = counters.concat([{ count: 0, color }]);

}

function removeCounter(counter) {

counters = counters.filter(x => x != counter);

}

</script>

{#each counters as counter}

<div>

<button on:click={() => removeCounter(counter)}>X</button>

<input

type="number"

bind:value={counter.count}

style={`border: solid ${counter.color}`}

>

<button on:click={() => counter.count += 1}>+</button>

<button on:click={() => counter.count -= 1}>-</button>

</div>

{/each}

<p>Total = {total}</p>

<button on:click={addCounter}>+</button>

A Rust equivalent (as shown at the end of part 2) will require a little bit of macro magic and a whole new library of datastructures. But I think it is possible! If you want to help with the proof-of-concept, ping samsartor:matrix.org ahead of the next blog post.